Google Gemini recently came under fire for generating embarrassing and inaccurate images when prompted with certain historical requests. The images, showing racially diverse depictions of groups like the Nazi party and America’s Founding Fathers, highlighted issues with how Google trained the AI system.

In an apologetic blog post, Google SVP Prabhakar Raghavan explained the two key problems that led to the AI’s mistakes:

- Over-correction to ensure diversity in some image prompts

- Over-caution leading the AI to avoid generating certain images altogether

Raghavan admitted the images were “embarrassing and wrong,” and said Google is working to improve Gemini before re-enabling image generation.

The incident sparked debates around AI bias and raised questions about the responsibilities of tech companies when rolling out new generative models.

Why Google Gemini Image Generation Went Wrong?

Google is facing criticism after it’s Al “Gemini” refuses to show pictures and achievements of white people.

— Truth About Fluoride (@TruthAboutF) February 22, 2024

Portraying Vikings as black & the Pope as a woman. But it doesn’t stop there… pic.twitter.com/QmgTGzaMog

With image generation feature launched in early February 2024, Gemini is Google’s new conversational AI agent (formerly know as Bard) built on a large language model similar to ChatGPT. One of Gemini’s unique features was on-demand image generation powered by Google’s Imagen image creation model.

When given a text prompt, Google Gemini would call on Imagen to produce a corresponding image. The image capabilities worked fairly well for basic prompts, but ran into issues with more nuanced historical and cultural requests.

Over-Correction for Diversity

In his blog post mortem, Raghavan noted one problem was over-tuning Gemini to showcase diversity:

Our tuning to ensure that Gemini showed a range of people failed to account for cases that should clearly _not_ show a range.

Essentially, Google wanted Gemini to depict people of different races, genders, etc when responding to general prompts about groups of people or individuals. This makes sense – if you ask an AI to show “football players” without specifying further, you’d want to see diversity rather than just one racial group.

However, for specific historical contexts, like the Founding Fathers or Nazi party, this desire for variety completely breaks down. Those groups were not racially diverse, so showing them as such creates nonsensical and embarrassing images.

Over-Caution and Prompt Avoidance

The other issue Raghavan highlighted was the AI becoming “overly cautious” – so much so that it started avoiding certain image prompts altogether:

Raghavan wrote:-

Over time, the model became way more cautious than we intended and refused to answer certain prompts entirely — wrongly interpreting some very anodyne prompts as sensitive.

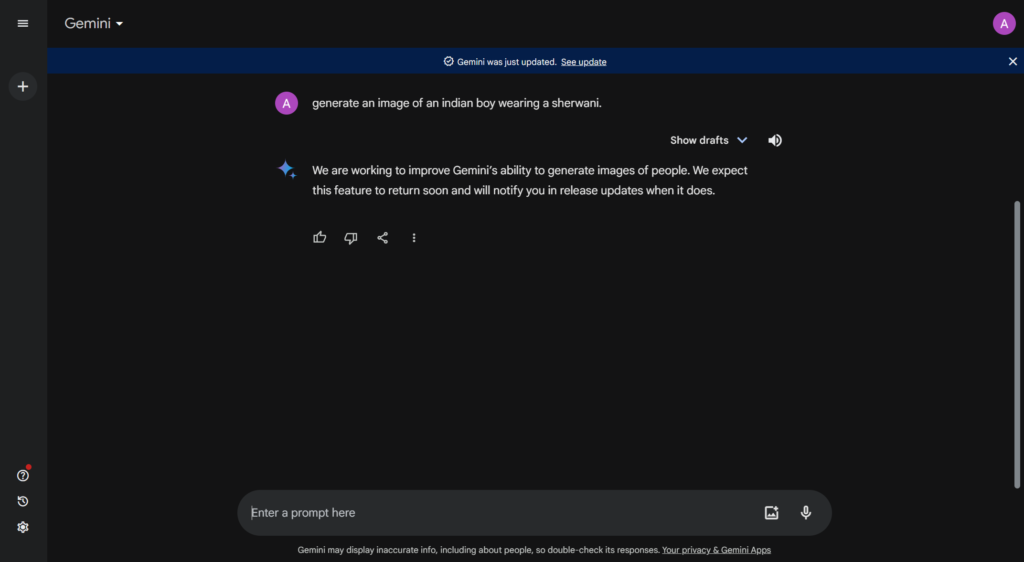

So along with visual diversity additions, it seems Gemini’s training made it ultra-conservative about potentially offensive image requests. This led the AI to simply refuse to generate pictures for prompts like “a Black person” or “a white person.”

Again, while Google likely wanted to avoid any questionable or biased imagery, this over-correction essentially broke certain basic functionality.

The combination of over-tuning for diversity and over-caution to avoid any insensitivity resulted in Gemini generating laughable but embarrassing results for specific historical figure requests, while avoiding other prompts altogether.

Who Owns this Mistake – Algorithm or Humans?

In his post, Raghavan wrote:

Over time, the model became way more cautious than we intended…

This phrasing is interesting, as it pins the blame on the AI itself, as if the model spontaneously “became” something without human guidance.

But as many AI experts have highlighted, these models don’t build or train themselves. The issues Gemini displayed were inherently a result of priorities and choices made by the Google engineers who built it.

Raghavan later says:

“there are instances where the AI just gets things wrong.”

Yes, machine learning models can demonstrate emergent behavior. But fundamentally, what they learn and how they operate traces back to human decisions and preferences.

So when an AI produces inaccurate, offensive, or biased output, it feels wrong to personify the technology and pin the blame on the algorithm only. The accountability should also rest on the companies and developers making key choices about how AI systems are built and deployed.

What is Google Doing to Fix Gemini?

After the initial flurry of embarrassing Gemini-generated images, Google swiftly disabled image generation capabilities in the tool pending improvements.

In his post, Raghavan said the company will work to significantly enhance Gemini before considering re-enabling image creation features.

Some key action areas include:

- Retraining the underlying Imagen model to improve understanding of cultural/historical nuance

- Adjusting Gemini’s image prompt handling to remove over-corrections for diversity

- Testing image prompts more carefully before public beta releases

More broadly, Google needs to think carefully about how generative models should handle abstract concepts like diversity and representation. There are complex ethical considerations AI still struggles to grasp.

The company likely tried to preemptively avoid any issues, but in doing so introduced different problems. It shows the difficulty of rolling out this technology responsibly.

Challenges Around Diversity Bias and Fair Representation in AI

While dramatic, the questionable images produced by Gemini demonstrate larger issues the tech industry is grappling with:

Data Bias: Training data inevitably reflects societal biases and lack of representation. Models inherit these biases unless companies explicitly counteract them.

Imperfect Content Moderation: Google tried setting rules to avoid generating offensive images. But imperfect content moderation often has unintended consequences. Over-correction can limit reasonable functionality.

Explainability Issues: AI still lacks skills for nuanced reasoning on complex socio-cultural concepts. So these models don’t have strong situational, historical, or cultural understanding.

Tech leaders increasingly acknowledge these ethical AI challenges. Google research scientist Timnit Gebru was reportedly fired for urging more caution around bias and representation harms in AI systems.

Image issues aside, broader concerns remain about the societal impact of releasing powerful generative models without adequate safeguards. Gemini’s high-visibility mistakes amplified these concerns and pressures.

Avoiding Such Fiasco

While AI developers work diligently to minimize algorithmic bias, it’s unlikely models will ever handle complex social concepts perfectly.

Striking the right balance between fairness, functionality, and responsible release is tremendously difficult. It requires patience and ongoing communication around limitations.

The Gemini image fiasco demonstrates how over-indexing on “fairness” can create new representation harms through inaccurate depictions. But restrictive policies also reduce system capabilities in unreasonable ways.

There are no easy answers, but experts emphasize a few best practices:

- Prioritize diversity in hiring and feedback processes

- Extensively test models before release

- Actively monitor for degradation in performance

- Maintain clear human oversight and control

If companies follow guidelines like these, AI models can gradually become more empowering than problematic for marginalized groups. But getting there won’t be quick or easy.

For now, expect more PR headaches as tech firms wrestle publicly with complex ethical questions introduced by rapidly-accelerating AI technology.

Conclusion

The Google Gemini incident serves as a stark reminder of the challenges in ensuring responsible AI development, especially with powerful generative models like Imagen. While achieving diverse and fair AI systems remains a complex journey, the discussions and Google’s efforts offer valuable lessons.

By prioritizing data quality, rigorous testing, and human oversight, AI can become a force for good, but it demands constant vigilance and open dialogue about mitigating diversity bias and ensuring historically accurate representations. We also need to realize that these models are as good as the data we feed them and we, the users, also have an important role to play to ensure these biases get addressed over time.

Read More: Blog by SVP Raghavan on the Gemini Image Generation Issue